Understanding Neural Networks: A Comprehensive Guide

Neural networks represent one of the most transformative technologies in modern artificial intelligence. These computational models, inspired by the structure and function of biological neural networks in the human brain, have revolutionized how machines learn from data and make decisions. Understanding neural networks is essential for anyone pursuing a career in AI or machine learning, as they form the foundation for numerous applications ranging from image recognition to natural language processing.

The Biological Inspiration Behind Neural Networks

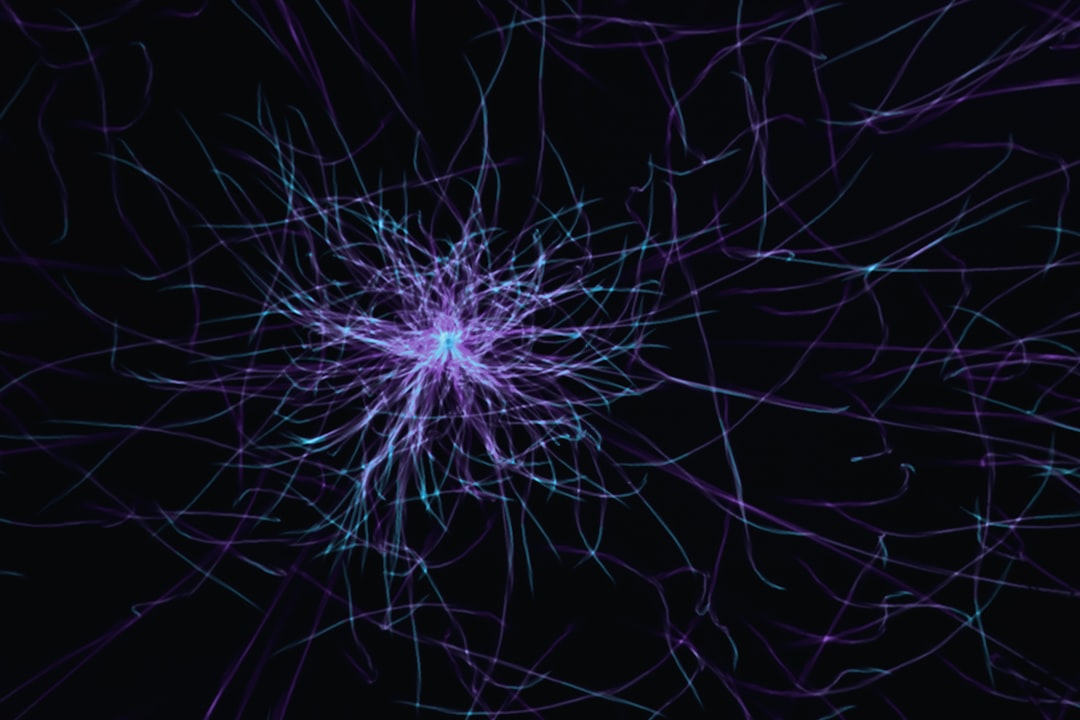

The concept of neural networks draws inspiration from neuroscience. In the human brain, neurons are interconnected cells that transmit information through electrical and chemical signals. Each neuron receives inputs from other neurons, processes this information, and sends outputs to subsequent neurons. This biological architecture inspired computer scientists to create artificial neural networks that mimic this structure using mathematical operations and computational algorithms.

Artificial neurons, also called nodes or units, are organized in layers and connected through weighted links. These weights determine the strength of connections between neurons, similar to how synaptic strengths vary in biological neural networks. During the learning process, these weights are adjusted to improve the network's performance on specific tasks.
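
To make the idea of weighted links concrete, here is a minimal sketch of a single artificial neuron's weighted sum. The function name `neuron_output` and the example numbers are illustrative assumptions, not taken from any particular library.

```python
def neuron_output(inputs, weights, bias):
    """Return the weighted sum of the inputs plus a bias term.

    No activation function is applied here; this is only the
    "weighted links" part of an artificial neuron.
    """
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Illustrative values (assumed for this example).
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 2.0]   # larger magnitude = stronger connection
bias = 1.0

z = neuron_output(inputs, weights, bias)
print(z)  # 0.5 - 2.0 + 6.0 + 1.0 = 5.5
```

Training changes the values in `weights` and `bias`; the structure of the computation stays the same.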

Architecture of Neural Networks

A typical neural network consists of three main types of layers: the input layer, hidden layers, and the output layer. The input layer receives raw data, whether it be pixel values from images, numerical features from datasets, or encoded text information. This data flows through the network, undergoing transformations at each layer until reaching the output layer, which produces the final prediction or classification.

Input Layer

The input layer serves as the gateway for data entering the neural network. Each neuron in this layer corresponds to a feature in the input data. For example, in a network designed to classify handwritten digits, the input layer might contain 784 neurons, each representing one pixel in a 28x28-pixel grayscale image. The input layer does not perform any computations; it simply passes data forward to subsequent layers.
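
As a rough illustration of the digit example, the sketch below flattens a 28x28 grid of pixel values into the 784 features an input layer would receive. The helper name `flatten` and the all-zero test image are assumptions made for this example.

```python
def flatten(image):
    """Turn a 2D grid of pixel values into one flat feature list (row by row)."""
    return [pixel for row in image for pixel in row]


# A hypothetical 28x28 grayscale image, all black except one bright pixel.
image = [[0.0] * 28 for _ in range(28)]
image[3][5] = 1.0

features = flatten(image)
print(len(features))          # 784, one input neuron per pixel
print(features[3 * 28 + 5])   # 1.0, the bright pixel at row 3, column 5
```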

Hidden Layers

Hidden layers perform most of the network's computation. These layers extract features and patterns from the input data through a series of mathematical transformations. Modern deep learning networks often contain many hidden layers, hence the term deep neural networks. Each hidden layer neuron receives weighted inputs from the previous layer, applies an activation function, and passes the result to the next layer.
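
The per-neuron computation described above can be sketched as a small fully connected layer. This is a minimal pure-Python version under assumed names (`dense_layer`, `relu`) and made-up weights; real frameworks implement the same idea with optimized matrix operations.

```python
def relu(z):
    """Rectified Linear Unit: pass positive values, clamp negatives to zero."""
    return max(0.0, z)


def dense_layer(inputs, weights, biases, activation):
    """Compute the outputs of one fully connected layer.

    weights[j] holds the incoming weights of neuron j, so the layer has
    len(weights) neurons and each neuron sees every input.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, neuron_weights)) + bias
        outputs.append(activation(z))
    return outputs


# Two inputs feeding three hidden neurons (all values are illustrative).
hidden = dense_layer(
    inputs=[1.0, -2.0],
    weights=[[0.5, 0.5], [1.0, -1.0], [-1.0, 0.0]],
    biases=[0.0, 0.5, 0.0],
    activation=relu,
)
print(hidden)  # [0.0, 3.5, 0.0]
```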

The depth and width of hidden layers significantly impact a network's capacity to learn complex patterns. Deeper networks can learn hierarchical representations, where early layers detect simple features like edges and textures, while deeper layers combine these simple features to recognize more complex patterns like objects or faces.

Output Layer

The output layer produces the final result of the neural network's computation. The structure of this layer depends on the task at hand. For binary classification problems, the output layer might contain a single neuron, typically with a sigmoid activation, producing a value between 0 and 1 that indicates the probability of belonging to a particular class. For multi-class classification, the output layer typically contains one neuron per class, with an activation function like softmax ensuring the outputs are non-negative and sum to 1, so they can be interpreted as probabilities.
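
The two output styles mentioned above can be sketched as follows. This is an illustrative implementation using only the standard library; the input scores are made up, and subtracting the maximum score inside `softmax` is a common numerical-stability choice rather than something the text prescribes.

```python
import math


def sigmoid(z):
    """Map any real number to the open interval (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, this form avoids overflow in math.exp.
    ez = math.exp(z)
    return ez / (1.0 + ez)


def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max does not change the result
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Binary classification: one output neuron.
print(sigmoid(0.0))  # 0.5

# Multi-class classification: one output neuron per class.
probabilities = softmax([2.0, 1.0, 0.1])
print(probabilities)  # largest score gets the largest probability
```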

How Neural Networks Learn

Neural networks learn through a process called training, which involves adjusting the weights of connections between neurons to minimize prediction errors. This process relies on two key mechanisms: forward propagation and backpropagation.

During forward propagation, input data flows through the network from the input layer to the output layer. Each neuron computes a weighted sum of its inputs, applies an activation function, and passes the result forward. The output layer produces a prediction, which is then compared to the actual target value using a loss function that quantifies the prediction error.
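
A forward pass followed by a loss computation might look like the sketch below. It assumes a tiny network with two inputs, two hidden neurons, and one output, sigmoid activations throughout, and a squared-error loss; the weights and the names `forward` and `squared_error` are illustrative choices, not a prescribed design.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Run one input vector through a hidden layer and a single output neuron."""
    hidden = [
        sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)
        for w, b in zip(w_hidden, b_hidden)
    ]
    return sigmoid(sum(h * w for h, w in zip(hidden, w_out)) + b_out)


def squared_error(prediction, target):
    """Loss for one example: zero when the prediction matches the target."""
    return 0.5 * (prediction - target) ** 2


# Illustrative (untrained) weights.
prediction = forward(
    x=[1.0, 2.0],
    w_hidden=[[0.1, -0.2], [0.4, 0.3]],
    b_hidden=[0.0, 0.1],
    w_out=[0.5, -0.5],
    b_out=0.2,
)
loss = squared_error(prediction, target=1.0)
print(prediction, loss)
```

The loss value is the single number that training tries to reduce.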

Backpropagation is the algorithm used to work out how the network's weights should change in response to the prediction error. It calculates the gradient of the loss function with respect to each weight in the network, indicating how a small change in that weight would change the error. These gradients are then used by an optimization algorithm, typically gradient descent or one of its variants, to adjust the weights in a direction that reduces the error.
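
The sketch below shows the idea in its simplest form: a single sigmoid neuron trained with plain gradient descent, with the gradient obtained by the chain rule. Full backpropagation applies the same chain rule layer by layer; the single-neuron setup, the squared-error loss, the learning rate, and all names here are simplifying assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grads(w, b, x, target):
    """Return the loss and its gradients with respect to w and b."""
    z = w * x + b
    y = sigmoid(z)
    loss = 0.5 * (y - target) ** 2
    # Chain rule: dloss/dw = dloss/dy * dy/dz * dz/dw
    dloss_dy = y - target
    dy_dz = y * (1.0 - y)        # derivative of the sigmoid
    dloss_dz = dloss_dy * dy_dz
    return loss, dloss_dz * x, dloss_dz


def train(w, b, x, target, learning_rate=0.5, steps=100):
    """Plain gradient descent: step against the gradient each iteration."""
    history = []
    for _ in range(steps):
        loss, grad_w, grad_b = loss_and_grads(w, b, x, target)
        history.append(loss)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history


w, b, history = train(w=0.0, b=0.0, x=1.5, target=1.0)
print(history[0], history[-1])  # the loss is lower at the end than at the start
```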

Activation Functions: The Non-Linear Elements

Activation functions introduce non-linearity into neural networks, enabling them to learn complex patterns that cannot be captured by linear models. Without non-linear activation functions, stacking multiple layers would be mathematically equivalent to a single linear transformation, severely limiting the network's capabilities.
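
The collapse of stacked linear layers can be checked directly. In this small sketch, two weight matrices (arbitrary example values, with biases omitted for brevity) are applied one after the other and then compared with a single layer whose matrix is their product.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


# Two "layers" with no activation function (illustrative weights).
W1 = [[1.0, 2.0], [0.0, -1.0]]
W2 = [[3.0, 0.0], [1.0, 1.0]]
x = [2.0, -1.0]

two_layers = matvec(W2, matvec(W1, x))   # layer 1, then layer 2
one_layer = matvec(matmul(W2, W1), x)    # a single combined layer

print(two_layers, one_layer)  # [0.0, 1.0] [0.0, 1.0]
```

Inserting a non-linear function between the two multiplications breaks this equivalence, which is what lets depth add expressive power.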

Common activation functions include the sigmoid function, which maps inputs to values between 0 and 1; the hyperbolic tangent, which produces outputs between -1 and 1; and the Rectified Linear Unit (ReLU), which outputs the input if positive and zero otherwise. ReLU has become particularly popular in deep learning due to its computational efficiency and ability to mitigate the vanishing gradient problem that affects deeper networks.
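
For reference, the three functions named above can be written in a few lines. This is a plain standard-library sketch for single values; the sample inputs are arbitrary.

```python
import math


def sigmoid(z):
    """Output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Output in (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Output is z when z is positive, otherwise 0."""
    return max(0.0, z)


for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```

ReLU needs only a comparison, while sigmoid and tanh each require evaluating an exponential, which is one source of its efficiency.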

Challenges and Considerations

Training neural networks presents several challenges. Overfitting occurs when a network fits the training data too closely, including its noise and peculiarities, resulting in poor performance on new, unseen data. Techniques like regularization, dropout, and data augmentation help combat overfitting by preventing the network from becoming too specialized.
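
As one example of these techniques, the sketch below implements dropout in its common "inverted" form: during training each activation is zeroed with probability `drop_prob`, and the survivors are scaled up so the expected value stays the same. The function signature and the use of a seeded `random.Random` are assumptions made for illustration.

```python
import random


def dropout(activations, drop_prob, rng, training=True):
    """Randomly zero activations during training (inverted dropout).

    At inference time (training=False) the activations pass through unchanged.
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


rng = random.Random(0)  # seeded only to make the example repeatable
activations = [1.0, 2.0, 3.0, 4.0]

print(dropout(activations, drop_prob=0.5, rng=rng))                  # some zeros, survivors doubled
print(dropout(activations, drop_prob=0.5, rng=rng, training=False))  # unchanged
```

Because a different random subset of neurons is dropped on every training step, the network cannot rely too heavily on any single neuron.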

The vanishing gradient problem affects deep networks where gradients become extremely small as they propagate backward through many layers, making it difficult for early layers to learn effectively. Architectural choices such as ReLU activations, residual connections, and normalization layers, together with careful weight initialization schemes, have been developed to address this issue, enabling the successful training of very deep networks.
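
A back-of-the-envelope sketch shows why gradients shrink. During the backward pass the gradient is multiplied by one activation derivative per layer. The code below makes the simplifying assumptions that every weight equals 1 and every neuron sees the same pre-activation value, so only the activation derivatives matter; the function names are illustrative.

```python
import math


def sigmoid_derivative(z):
    """Derivative of the sigmoid; its maximum value is 0.25, reached at z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def relu_derivative(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0


def gradient_scale(derivative, z, num_layers):
    """Product of activation derivatives across num_layers layers.

    Simplifying assumption: all weights are 1 and every layer sees the same z.
    """
    scale = 1.0
    for _ in range(num_layers):
        scale *= derivative(z)
    return scale


print(gradient_scale(sigmoid_derivative, 0.0, 20))  # 0.25 ** 20, roughly 9.1e-13
print(gradient_scale(relu_derivative, 1.0, 20))     # 1.0
```

Even in the sigmoid's best case, twenty layers scale the gradient down by about twelve orders of magnitude, while an active ReLU passes it through unchanged.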

Conclusion

Neural networks have transformed artificial intelligence by providing powerful tools for learning from data and making predictions. Understanding their architecture, learning mechanisms, and the principles underlying their operation is crucial for anyone working in AI and machine learning. As research continues to advance, neural networks are becoming increasingly sophisticated, opening new possibilities for solving complex problems across diverse domains.

At NeuroLearn Academy, we provide comprehensive training that covers these fundamental concepts and much more, preparing students to build and deploy neural networks effectively. Whether you are just starting your journey in AI or looking to deepen your expertise, understanding neural networks is an essential step toward mastering modern artificial intelligence.